Get Hands on - The Dataset

About the Data

Previously, there wasn't really any existing datasets that were easy to get started with when you are a noob to semantic segmentation. The existing datasets are hundreds of MB to download. They involve quite a bit of preprocessing to get them in a usable format for a deep learning pipeline. It is also hard to get a very basic model to work well on them. In order to get noticeably nice results, they will usually require models that use up a lot of computational resources (due to a large number of trainable parameters), or, models that are computationally efficient, but architecturally more complex for a beginner to understand. As such, it is difficult for new-comers to get started with segmentation.

There really needed to be an MNIST equivalent dataset, but for segmentation. Something that would be simple to get started with, even with a basic model, and without requiring GPUs or cloud computing.

As such, I have created a new dataset that is just a tiny downloadable file of 2.5MB, However, despite its tiny size, it contains more training samples than most segmentation datasets. The images used are so basic, that you can get noticeable results with just a very simple model, and be able to run it easily on a medium to low spec laptop.

The dataset is called the SiSI (pronounced "see see") dataset, which stands for Simple Segmentatino Image Dataset. The code that was used to generate it is hosted on this Github repo. Feel free to explore that repo if you wish to generate more training samples of your own. However, we will be downloading a prepared version of the data for this tutorial

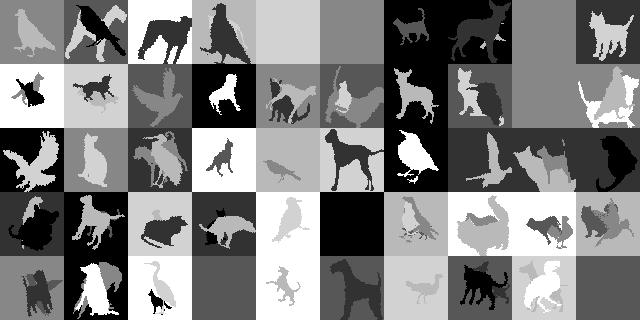

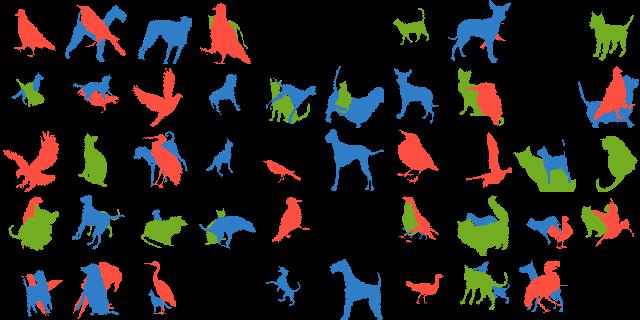

The inputs are greyscale images of the silhouettes of cats, dogs, and birds. The task is to correctly identify which regions of the image belong to each of the three different animals. There is actually also a 4th class, null, for regions that contain none of the other three objects. A sample of the input images and corresponding visualization of the segmentation labels is shown below.

Getting and exploring the data

You can download the zipped up data from this data link, or you can download it and extract it using the command line as follows (on Linux):

wget -c https://raw.githubusercontent.com/ronrest/sisi_dataset_pickles/master/data64_flat_grey.pickle.zip unzip data64_flat_grey.pickle.zip

It is only 2.5 MB to download, but once extracted, you get a file called data64_flat_grey.pickle that is about 75MB. It is a pickle file that contains a python dictionary.

You can load up the pickle file in python by running:

pickle_file = "data64_flat_grey.pickle" with open(pickle_file, mode = "rb") as fileObj: data = pickle.load(fileObj)

The data is actually just a python dictionary that contains numpy arrays for training, validation and testing. You can explore the dimensions of the data by running:

print("DATA SHAPES") print("- X_train: ", data["X_train"].shape) print("- Y_train: ", data["Y_train"].shape) print("- X_valid: ", data["X_valid"].shape) print("- Y_valid: ", data["Y_valid"].shape) print("- X_test : ", data["X_test"].shape) print("- Y_test : ", data["Y_test"].shape)

[OUTPUT] DATA SHAPES - X_train: (5120, 64, 64, 1) - Y_train: (5120, 64, 64) - X_valid: (1024, 64, 64, 1) - Y_valid: (1024, 64, 64) - X_test : (1024, 64, 64, 1) - Y_test : (1024, 64, 64)